Using Million Song Dataset In Hadoop

April 19, 2014

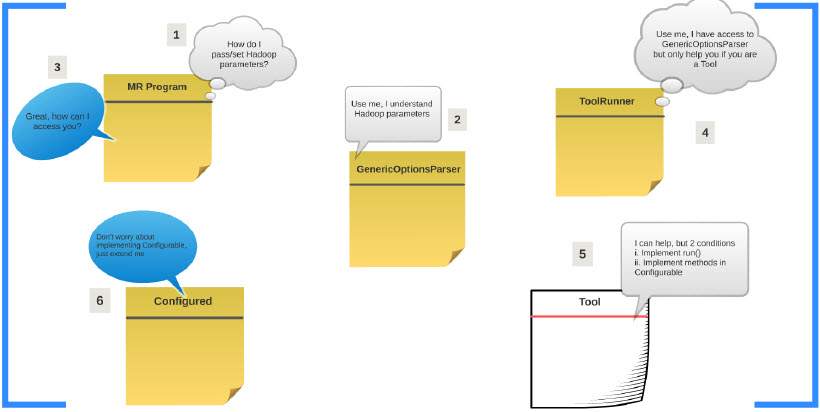

This post explains how to unit test a MapReduce program using MRUnit. Apache MRUnit™ is a Java library that helps developers unit test Apache Hadoop MapReduce jobs.

The example in this post uses the Weather dataset and works with the year and temperature extracted from each record. The same approach carries over directly to your own data.

Preparing your environment

Download MRUnit from http://mirror.reverse.net/pub/apache/mrunit/mrunit-1.0.0 and add the libraries under MRUnit’s lib directory to your CLASSPATH.

Preparing the Test

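First, declare a driver for each piece under test. The type parameters of MapDriver are the Mapper's input key, input value, output key and output value; ReduceDriver takes the same four types for the Reducer; and MapReduceDriver lists the Map input key/value, Map output key/value and Reduce output key/value types, in that order. In setUp(), which JUnit runs before every test because of the @Before annotation, create the Mapper and Reducer and hand them to the drivers.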

```java
// Driver for the Mapper: <map input key, map input value, map output key, map output value>
MapDriver<LongWritable, Text, Text, IntWritable> mapDriver;

// Driver for the Reducer: <reduce input key, reduce input value, reduce output key, reduce output value>
ReduceDriver<Text, IntWritable, Text, IntWritable> reduceDriver;

// Driver for the whole MapReduce program: map input, map output and reduce output types
MapReduceDriver<LongWritable, Text, Text, IntWritable, Text, IntWritable> mapReduceDriver;

@Before
public void setUp() {
    MaxTemperatureMapper mapper = new MaxTemperatureMapper();
    MaxTemperatureReducer reducer = new MaxTemperatureReducer();

    // Set up the Mapper driver
    mapDriver = MapDriver.newMapDriver(mapper);

    // Set up the Reducer driver
    reduceDriver = ReduceDriver.newReduceDriver(reducer);

    // Set up the MapReduce driver (Mapper and Reducer together)
    mapReduceDriver = MapReduceDriver.newMapReduceDriver(mapper, reducer);
}
```
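setUp() also creates a mapReduceDriver, which tests the Mapper and Reducer together: every input goes through the Mapper, the map output is grouped by key, and each group goes to the Reducer. To make that flow concrete, here is a minimal Hadoop-free sketch. The class InMemoryMapReduce is hypothetical, plain Java types stand in for Writables, and this is an illustration of the idea, not how MRUnit is implemented internally.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;
import java.util.function.BiFunction;

// Hypothetical, Hadoop-free model of the flow a MapReduceDriver test exercises.
// NOT MRUnit's implementation; plain Java types stand in for Hadoop Writables.
final class InMemoryMapReduce {

    // Type parameters mirror MapReduceDriver<K1, V1, K2, V2, K3, V3>:
    // map input, map output (= reduce input), reduce output.
    static <K1, V1, K2 extends Comparable<K2>, V2, K3, V3> List<Map.Entry<K3, V3>> run(
            List<Map.Entry<K1, V1>> input,
            BiFunction<K1, V1, List<Map.Entry<K2, V2>>> mapper,
            BiFunction<K2, List<V2>, List<Map.Entry<K3, V3>>> reducer) {

        // Map phase, then shuffle: collect every value emitted for the same key.
        // A TreeMap keeps the keys sorted, as Hadoop sorts map output before reducing.
        SortedMap<K2, List<V2>> groups = new TreeMap<>();
        for (Map.Entry<K1, V1> record : input) {
            for (Map.Entry<K2, V2> pair : mapper.apply(record.getKey(), record.getValue())) {
                groups.computeIfAbsent(pair.getKey(), key -> new ArrayList<>()).add(pair.getValue());
            }
        }

        // Reduce phase: one reducer call per key, in sorted key order.
        List<Map.Entry<K3, V3>> output = new ArrayList<>();
        for (Map.Entry<K2, List<V2>> group : groups.entrySet()) {
            output.addAll(reducer.apply(group.getKey(), group.getValue()));
        }
        return output;
    }
}
```

For example, mapping the hypothetical simplified lines "1901,-78" and "1901,11" to (year, temperature) pairs and reducing each year with Collections.max yields the single pair (1901, 11). Assuming the real Reducer keeps the maximum, as testReducer below suggests, that is the output a mapReduceDriver test fed the two records from testMapper would expect. The sketch uses List.of and Map.entry, so it needs Java 9 or later.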

Testing Mapper

Pass the Mapper's input key and value to mapDriver.withInput, and pass the output key and value you expect the Mapper to emit for that input to mapDriver.withOutput.

mapDriver.runTest() runs the configured Mapper on the given input and compares what it emits with the expected output. If they match, the test passes; otherwise it fails.

```java
@Test
public void testMapper() throws IOException {
    // Test the Mapper with this input...
    mapDriver.withInput(new LongWritable(), new Text(
            "0029029070999991901010106004+64333+023450FM-12+000599999V0202701N015919999999N0000001N9-00781+99999102001ADDGF108991999999999999999999"));
    // ...and expect this output
    mapDriver.withOutput(new Text("1901"), new IntWritable(-78));

    // Test the Mapper with this input...
    mapDriver.withInput(new LongWritable(), new Text(
            "0029029810999991901050720004+59500+020350FM-12+002699999V0201101N003119999999N0000001N9+00111+99999101281ADDGF107991999999999999999999"));
    // ...and expect this output
    mapDriver.withOutput(new Text("1901"), new IntWritable(11));

    // Run the Map test with the inputs and outputs above. IOException is declared
    // rather than caught and printed, so an I/O error fails the test instead of
    // being silently swallowed.
    mapDriver.runTest();
}
```
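The expected outputs in testMapper come from fixed positions in each NCDC weather record. The Mapper's source is not shown in this post, so the class below is a hypothetical plain-Java sketch, assuming MaxTemperatureMapper follows the Max Temperature example from Hadoop: The Definitive Guide: characters 15-18 hold the year, characters 87-91 the signed air temperature in tenths of a degree Celsius, and character 92 a quality code. The name NcdcParseSketch is made up for illustration.

```java
// Hypothetical sketch of the parsing MaxTemperatureMapper is ASSUMED to do.
// Offsets follow the NCDC fixed-width layout from "Hadoop: The Definitive Guide".
final class NcdcParseSketch {
    // +9999 marks a missing temperature reading
    static final int MISSING = 9999;

    // Characters 15-18: observation year, e.g. "1901"
    static String year(String line) {
        return line.substring(15, 19);
    }

    // Characters 87-91: signed temperature in tenths of a degree Celsius.
    // The leading '+' is skipped because Integer.parseInt rejects it before Java 7.
    static int airTemperature(String line) {
        return line.charAt(87) == '+'
                ? Integer.parseInt(line.substring(88, 92))
                : Integer.parseInt(line.substring(87, 92));
    }

    // Character 92: quality code; only present, good-quality readings are kept
    static boolean isValid(String line) {
        return airTemperature(line) != MISSING && line.substring(92, 93).matches("[01459]");
    }
}
```

Under these assumptions, the first test record has the temperature field -0078 with quality code 1, giving (1901, -78), and the second has +0011, giving (1901, 11), which matches the two withOutput calls above.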

Testing Reducer

As with the Mapper test, specify the Reducer's input with reduceDriver.withInput and its expected output with reduceDriver.withOutput. Here the input is the key 1901 with the values 101 and 31, and the expected output is (1901, 101), the larger of the two. reduceDriver.runTest() passes if the Reducer's actual output matches the expected output and fails otherwise.

```java
@Test
public void testReducer() throws IOException {
    List<IntWritable> values = new ArrayList<IntWritable>();
    values.add(new IntWritable(101));
    values.add(new IntWritable(31));

    // Run the Reducer with this input...
    reduceDriver.withInput(new Text("1901"), values);
    // ...and expect this output
    reduceDriver.withOutput(new Text("1901"), new IntWritable(101));

    // Run the Reduce test with the input and output above; an IOException
    // now fails the test instead of being printed and ignored
    reduceDriver.runTest();
}
```
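The Reducer test expects 101, which implies MaxTemperatureReducer keeps the largest value for each key. Its source is not shown here, so the following is only a hypothetical plain-Java version of that step (MaxReduceSketch is an illustrative name, and Integer stands in for IntWritable). Starting from Integer.MIN_VALUE rather than 0 matters for this dataset, because all of a year's readings can be below zero; the first Mapper test record is -78.

```java
// Hypothetical sketch of the reduce step ASSUMED for MaxTemperatureReducer:
// keep the largest temperature seen for one key (year).
final class MaxReduceSketch {
    // Returns Integer.MIN_VALUE for an empty input; Hadoop never calls
    // reduce() with an empty value list, so that case does not arise there.
    static int maxTemperature(Iterable<Integer> temperatures) {
        int max = Integer.MIN_VALUE; // not 0: every reading may be negative
        for (int t : temperatures) {
            max = Math.max(max, t);
        }
        return max;
    }
}
```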

Complete Program

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mrunit.mapreduce.MapDriver;
import org.apache.hadoop.mrunit.mapreduce.MapReduceDriver;
import org.apache.hadoop.mrunit.mapreduce.ReduceDriver;
import org.junit.Before;
import org.junit.Test;

import com.hirw.maxtemperature.MaxTemperatureMapper;
import com.hirw.maxtemperature.MaxTemperatureReducer;

public class MaxTemperatureTest {

    // Driver for the Mapper: <map input key, map input value, map output key, map output value>
    MapDriver<LongWritable, Text, Text, IntWritable> mapDriver;

    // Driver for the Reducer: <reduce input key, reduce input value, reduce output key, reduce output value>
    ReduceDriver<Text, IntWritable, Text, IntWritable> reduceDriver;

    // Driver for the whole MapReduce program: map input, map output and reduce output types
    MapReduceDriver<LongWritable, Text, Text, IntWritable, Text, IntWritable> mapReduceDriver;

    @Before
    public void setUp() {
        MaxTemperatureMapper mapper = new MaxTemperatureMapper();
        MaxTemperatureReducer reducer = new MaxTemperatureReducer();

        // Set up the Mapper driver
        mapDriver = MapDriver.newMapDriver(mapper);

        // Set up the Reducer driver
        reduceDriver = ReduceDriver.newReduceDriver(reducer);

        // Set up the MapReduce driver (Mapper and Reducer together)
        mapReduceDriver = MapReduceDriver.newMapReduceDriver(mapper, reducer);
    }

    @Test
    public void testMapper() throws IOException {
        // Test the Mapper with this input...
        mapDriver.withInput(new LongWritable(), new Text(
                "0029029070999991901010106004+64333+023450FM-12+000599999V0202701N015919999999N0000001N9-00781+99999102001ADDGF108991999999999999999999"));
        // ...and expect this output
        mapDriver.withOutput(new Text("1901"), new IntWritable(-78));

        // Test the Mapper with this input...
        mapDriver.withInput(new LongWritable(), new Text(
                "0029029810999991901050720004+59500+020350FM-12+002699999V0201101N003119999999N0000001N9+00111+99999101281ADDGF107991999999999999999999"));
        // ...and expect this output
        mapDriver.withOutput(new Text("1901"), new IntWritable(11));

        // Run the Map test; a thrown IOException fails the test
        mapDriver.runTest();
    }

    @Test
    public void testReducer() throws IOException {
        List<IntWritable> values = new ArrayList<IntWritable>();
        values.add(new IntWritable(101));
        values.add(new IntWritable(31));

        // Run the Reducer with this input...
        reduceDriver.withInput(new Text("1901"), values);
        // ...and expect this output
        reduceDriver.withOutput(new Text("1901"), new IntWritable(101));

        // Run the Reduce test; a thrown IOException fails the test
        reduceDriver.runTest();
    }
}
```